Meta drops fact-checking, loosens its content moderation rules

Meta is ending its third-party fact-checking program, moving to a Community Notes model over the coming months. Community Notes is what sites like X.com use.

TechCrunch

CALIFORNIA, 7 JAN

Meta, the parent of Facebook, Instagram and WhatsApp, on Tuesday announced a major overhaul of its content moderation policies, removing some guardrails that it had put in place over several years in response to criticism that it had helped spread political and health misinformation.

In a blog post called “More speech, fewer mistakes”, Meta’s new chief global affairs officer, Joel Kaplan, outlined changes in three key areas to “undo the mission creep,” as he put it:

Meta is ending its third-party fact checking program, moving

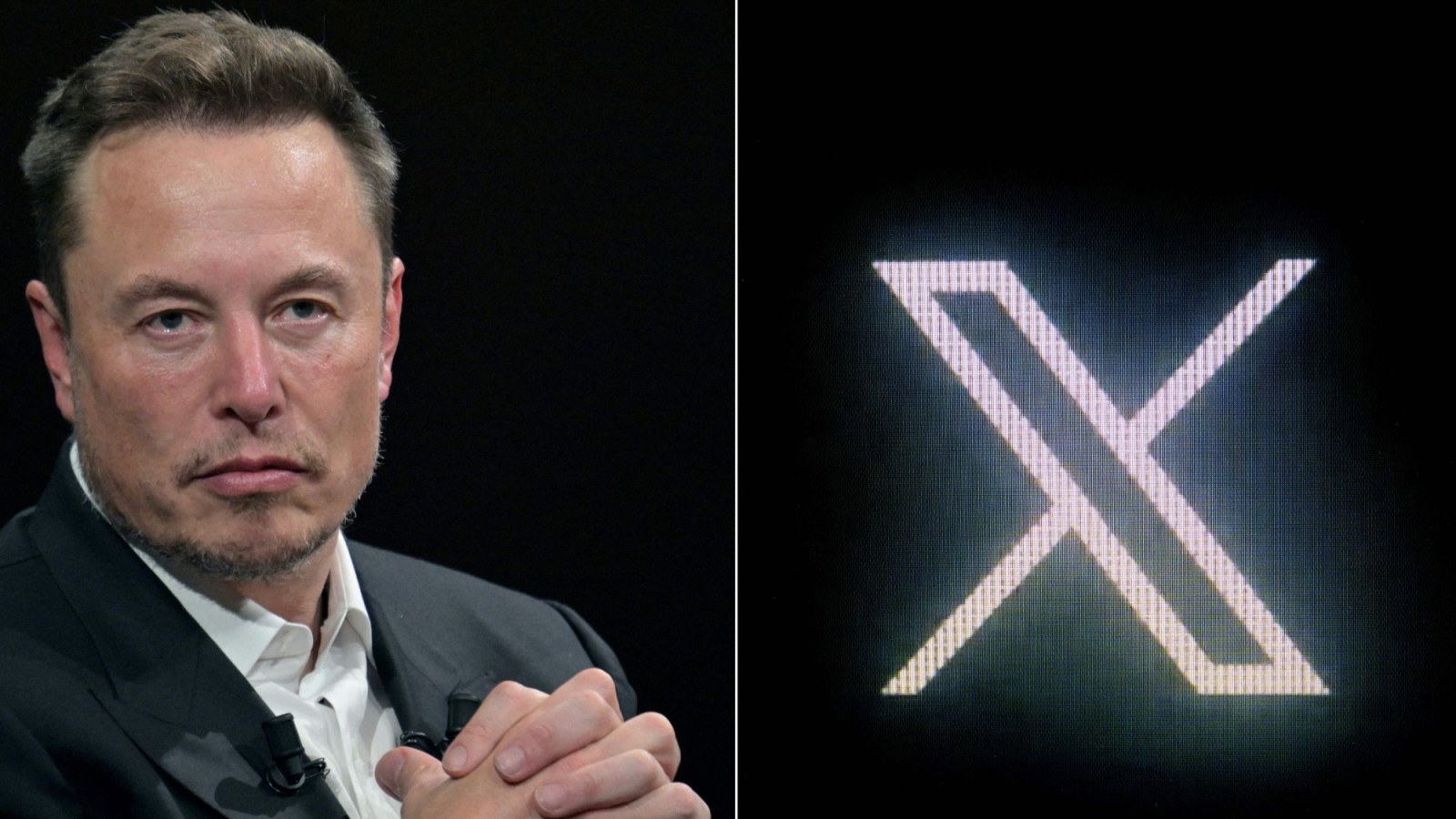

to a Community Notes model over the coming months. Community Notes is what

sites like X.com use.

It is lifting restrictions around “topics that are part of mainstream discourse,” instead focusing enforcement on “illegal and high-severity violations” in areas like terrorism, child sexual exploitation, drugs, fraud and scams.

Users will be encouraged to take a “personalized” approach to political content, making way for considerably more opinion and slant in people’s feeds that fits whatever they want to see. Yes, Meta is leaning into letting you create the echo chamber you’ve always wanted.

The moves are significant in part because they precede a new presidential administration in the US taking charge later this month. Donald Trump and his supporters have signalled that their interpretation of free speech is significantly more focused on encouraging a much wider set of opinions.

Facebook has been in the crosshairs of their criticism throughout the past few years, not least because one of the people it banned from its platforms in the name of content moderation was, at one point, Trump himself.

Meta’s content moderation provisions were put in place and honed over a number of years following political and public criticism of how it helped spread election misinformation, bad advice on Covid-19, and other controversies. The fact-checking program, for example, was first developed in 2016, with Meta working with third-party organizations on the heels of accusations that Facebook was being weaponised to spread fake news during the U.S. presidential election.

This eventually also led to the formation of an Oversight Board, more moderation, and other levers to help people control what content they saw, and to alert Meta when they believed it was toxic or misleading.

But these policies have not sat well with everyone. Some critics argue that the policies are not strong enough, while others believe they lead to too many mistakes, and others say the controls are too politically biased.
